When creating a custom vocal preset, am I correct that we should adjust the edits to not total more than 100%?

I have Saros set at 100% Clear and 20% Powerful. My ancient and pre-distorted ears notice it seems a tad overdriven when I go to mix down. Is that just the desired/expected result or should I back off and sum them to 100%? If there is mention in the UM, I missed it.

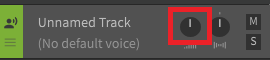

You can go past 100%. In prior versions, there was a way to manually adjust the file so it would go even higher.

If you’re hearing something like distortion, it may be that the amplitude is too high, and the signal is clipping.

Look at the .wav file to make sure it’s within range - SynthesizerV doesn’t have a limiter.

If it is clipping, turn down the rendering volume.
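For anyone who wants to check the rendered file outside a DAW, here is a minimal sketch. It assumes the render is a 16-bit PCM .wav; the function name `peak_report` is made up for illustration, and it relies only on Python's standard `wave` module, which may reject other formats such as 32-bit float.

```python
import array
import math
import sys
import wave


def peak_report(path):
    """Return (peak_dbfs, clipped_samples) for a 16-bit PCM WAV file."""
    with wave.open(path, "rb") as wav:
        if wav.getsampwidth() != 2:
            # Assumption of this sketch: only 16-bit PCM is handled.
            raise ValueError("this sketch only handles 16-bit PCM")
        frames = wav.readframes(wav.getnframes())

    samples = array.array("h")  # signed 16-bit integers
    samples.frombytes(frames)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian

    if not samples:
        return float("-inf"), 0

    peak = max(abs(s) for s in samples)
    # Samples sitting at the very top or bottom of the range are likely clipped.
    clipped = sum(1 for s in samples if s >= 32767 or s <= -32768)
    peak_dbfs = 20 * math.log10(peak / 32768) if peak else float("-inf")
    return peak_dbfs, clipped


if __name__ == "__main__" and len(sys.argv) > 1:
    db, clipped = peak_report(sys.argv[1])
    print(f"peak: {db:.2f} dBFS, samples at full scale: {clipped}")
```

A peak at or very near 0 dBFS, or a nonzero full-scale count, suggests lowering the rendering volume and rendering again. It will not catch the harmonic "over-modulation" kind of harshness, only hard clipping.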

Thanks, dcuny. I can take any of those params up to, I believe, 200% but they don’t have a clear explanation of what I’m actually doing… I know some parameters are designed that way, but if I try to extend a note by telling three syllables to each be 125% it cuts off the end of the word (logical). I backed it down to 80/20 and it does seem just a bit milder. I can scope it out for clipping in the DAW, but some over-modulation shows up as harmonic boost. I have an app for that - HarBal - but it’s not for real time. I’ll just keep pluggin’… Thx again.

While an explicit description has never been given by the developers, we have seen it said that settings over 100% might result in more noticeable synthesis artifacts when used with vocal modes that are more “experimental” or which had fewer original recordings in the machine learning dataset (i.e. the songs recorded during development).

In addition we have some anecdotal observations from when people used to be able to set more “extreme” values (like dcuny mentioned) that caused very obvious flaws, but also had rather exaggerated results.

We also know that this sort of AI model works by attempting to mimic whatever original learning materials were used during development, and that the main way of customizing the output (in a technical sense) is to specify various preferences so that the model treats certain results as more desirable.

From this we can pretty safely draw the conclusion that 100% represents a preference for the original timbre represented by that vocal mode, in a way that tries to mimic the original recordings.

Values beyond 100% indicate some amount of extrapolation beyond what the machine learning algorithms originally observed.

For modes with a lot of original data, this can be done pretty seamlessly, but for more experimental ones like SAROS’s “Resonant” mode, there might not have been enough original recordings to do that extrapolation, resulting in synthesis artefacts or flaws.
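As a toy picture of what "extrapolation" means here, the sketch below moves a made-up feature vector from a neutral point toward a mode by a given weight. This is purely illustrative and is not how Synthesizer V works internally; the `blend` function, the two-number "features", and their values are all assumptions invented for the example.

```python
def blend(neutral, mode, weight):
    """Move from `neutral` toward `mode` by `weight` (1.0 means 100%)."""
    return [n + weight * (m - n) for n, m in zip(neutral, mode)]


# Hypothetical values, chosen only to make the arithmetic easy to follow.
neutral = [0.0, 0.0]  # stand-in for "no vocal mode applied"
clear = [1.0, 0.5]    # stand-in for what was learned for one mode

print(blend(neutral, clear, 1.0))  # lands exactly on the learned mode
print(blend(neutral, clear, 1.5))  # goes past anything in the learned data
```

At 100% the result matches the learned point; beyond that the result sits outside it, which is where a model with little data for that mode has the least to go on.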

It’s pretty amazing that they could analyze and extrapolate ANY useful data of that sort. This does clarify my question, Claire, thank you. I HAVE unearthed a non-issue issue - a probable minor bug that is a feature. I documented it in a separate post with screen shots.